[1] ZHANG Qin, REN Hailin, HU Jiahui. Design and experiment of intelligent feed-pushing robot based on information fusion[J]. Transactions of the Chinese Society for Agricultural Machinery, 2022, 54(6): 78-84, 93.

[2] ZHANG Qin, HU Jiahui, REN Hailin. Intelligent pushing method and experiment of feeding assistant robot[J]. Journal of South China University of Technology (Natural Science Edition), 2022, 50(6): 111-120.

[3] ALBRIGHT J L. Feeding behavior of dairy cattle[J]. Journal of Dairy Science, 1993, 76(2): 485-498.

[4] STANKOVSKI S, OSTOJIC G, SENK I, et al. Dairy cow monitoring by RFID[J]. Scientia Agricola, 2012, 69: 75-80.

[5] PORTO S M C, ARCIDIACONO C, GIUMMARRA A, et al. Localisation and identification performances of a real-time location system based on ultra wide band technology for monitoring and tracking dairy cow behaviour in a semi-open free-stall barn[J]. Computers and Electronics in Agriculture, 2014, 108: 221-229.

[6] JIANG Wei, GUO Qing, SI Chen, et al. Device and method for dairy cow positioning: CN201310228400.3[P]. 2013-09-18.

[7] DHANYA V G, SUBEESH A, KUSHWAHA N L, et al. Deep learning based computer vision approaches for smart agricultural applications[J]. Artificial Intelligence in Agriculture, 2022, 6: 211-229.

[8] DIWAN T, ANIRUDH G, TEMBHURNE J V. Object detection using YOLO: challenges, architectural successors, datasets and applications[J]. Multimedia Tools and Applications, 2023, 82(6): 9243-9275.

[9] KUAN C Y, TSAI Y C, HSU J T, et al. An imaging system based on deep learning for monitoring the feeding behavior of dairy cows[C]//Proceedings of 2019 ASABE Annual International Meeting. Boston: American Society of Agricultural and Biological Engineers, 2019: 1/1-9.

[10] JIANG B, WU Q, YIN X, et al. FLYOLOv3 deep learning for key parts of dairy cow body detection[J]. Computers and Electronics in Agriculture, 2019, 166: 104982/1-8.

[11] BEZEN R, EDAN Y, HALACHMI I. Computer vision system for measuring individual cow feed intake using RGB-D camera and deep learning algorithms[J]. Computers and Electronics in Agriculture, 2020, 172: 105345/1-11.

[12] WANG J, ZHANG X, GAO G, et al. Open pose mask R-CNN network for individual cattle recognition[J]. IEEE Access, 2023, 11: 113752-113768.

[13] LI X, GAO R, LI Q, et al. Multi-target feeding-behavior recognition method for cows based on improved RefineMask[J]. Sensors, 2024, 24(10): 2975/1-19.

| [14] |

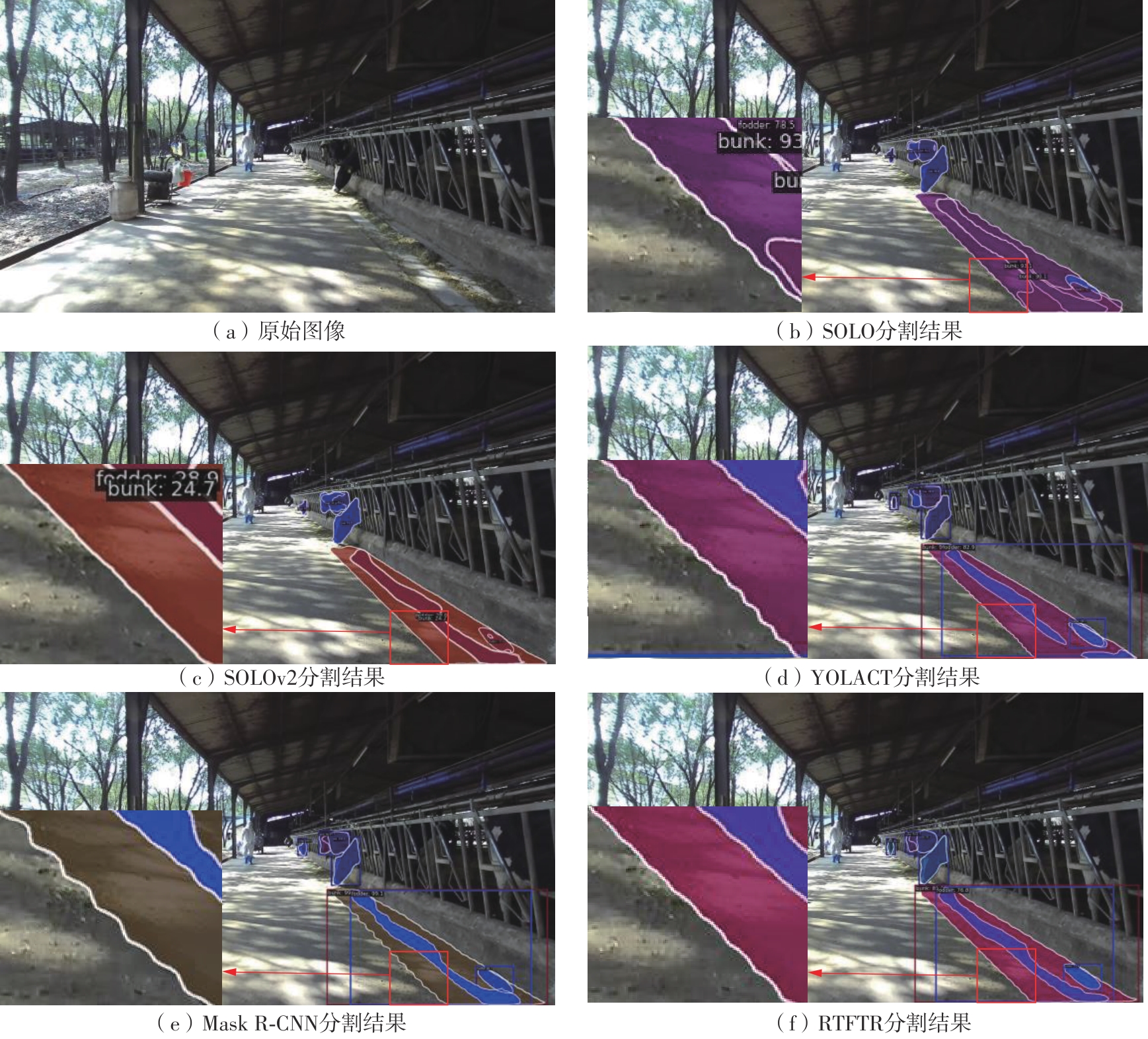

BOLYA D, ZHOU C, XIAO F,et al .YOLACT:real-time instance segmentation[C]∥ Proceedings of 2019 IEEE/CVF International Conference on Computer Vision.Seoul:IEEE,2019:9156-9165.

|

[15] YANG G, LI R, ZHANG S, et al. Extracting cow point clouds from multi-view RGB images with an improved YOLACT++ instance segmentation[J]. Expert Systems with Applications, 2023, 230: 120730/1-14.

[16] KIRILLOV A, MINTUN E, RAVI N, et al. Segment anything[C]//Proceedings of 2023 IEEE/CVF International Conference on Computer Vision. Paris: IEEE, 2023: 3992-4003.

[17] ZHANG J. Research on derived tasks and realistic applications of segment anything model: a literature review[J]. Frontiers in Computing and Intelligent Systems, 2023, 5(2): 116-119.

[18] YANG X, DAI H, WU Z, et al. An innovative segment anything model for precision poultry monitoring[J]. Computers and Electronics in Agriculture, 2024, 222: 109045/1-12.

[19] LI Q, ZHAO J, BAI D, et al. Cattle body size measurement system based on dual-position cameras[C]//Proceedings of 2024 International Conference on Computer Vision and Deep Learning. Changsha: ACM, 2024: 47/1-6.

[20] CHEN K, LIU C, CHEN H, et al. RSPrompter: learning to prompt for remote sensing instance segmentation based on visual foundation model[J]. IEEE Transactions on Geoscience and Remote Sensing, 2024, 62: 4701117/1-17.

[21] DOSOVITSKIY A, BEYER L, KOLESNIKOV A, et al. An image is worth 16 × 16 words: transformers for image recognition at scale[C]//Proceedings of the 9th International Conference on Learning Representations. [S.l.]: OpenReview.net, 2021: 1-21.

[22] XIONG Y, VARADARAJAN B, WU L, et al. EfficientSAM: leveraged masked image pretraining for efficient segment anything[C]//Proceedings of 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition. Seattle: IEEE, 2024: 16111-16121.

[23] YE Feng, CHEN Biao, LAI Yizong. Contrastive knowledge distillation method based on feature space embedding[J]. Journal of South China University of Technology (Natural Science Edition), 2023, 51(5): 13-23.

[24] KE L, YE M, DANELLJAN M, et al. Segment anything in high quality[C]//Advances in Neural Information Processing Systems 36: 37th Conference on Neural Information Processing Systems. San Diego: Neural Information Processing Systems Foundation, Inc., 2023: 29914-29934.

[25] LYU C, ZHANG W, HUANG H, et al. RTMDet: an empirical study of designing real-time object detectors[EB/OL]. (2022-12-16)[2024-11-25]..

[26] LI Y, MAO H, GIRSHICK R, et al. Exploring plain vision transformer backbones for object detection[C]//Proceedings of the 17th European Conference on Computer Vision. Tel Aviv: Springer, 2022: 280-296.

[27] TIAN Y, SU D, LAURIA S, et al. Recent advances on loss functions in deep learning for computer vision[J]. Neurocomputing, 2022, 497: 129-158.

[28] HE K, GKIOXARI G, DOLLÁR P, et al. Mask R-CNN[C]//Proceedings of 2017 IEEE International Conference on Computer Vision. Venice: IEEE, 2017: 2961-2969.

[29] WANG X, KONG T, SHEN C, et al. SOLO: segmenting objects by locations[C]//Proceedings of the 16th European Conference on Computer Vision. Glasgow: Springer, 2020: 649-665.

[30] WANG X, ZHANG R, KONG T, et al. SOLOv2: dynamic and fast instance segmentation[C]//Advances in Neural Information Processing Systems 33: 34th Conference on Neural Information Processing Systems. San Diego: Neural Information Processing Systems Foundation, Inc., 2020: 17721-17732.