Journal of South China University of Technology (Natural Science)

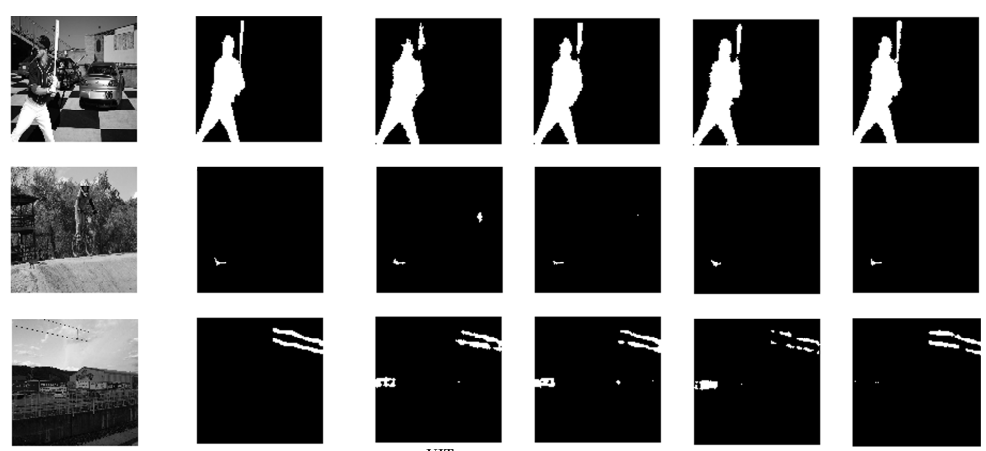

Image Tampering Localization Based on Visual Multi-Scale Transformer

Received date: 2021-09-17

Revised date: 2021-10-27

Online published: 2021-11-08

Supported by the National Social Science Foundation Key Project of China; the Major Program of the Zhongshan Industry-Academia-Research Fund

LU Lu, ZHONG Wen-Yu, WU Xiao-Kun. Image Tampering Localization Based on Visual Multi-Scale Transformer[J]. Journal of South China University of Technology (Natural Science), 2022, 50(6): 10-18. DOI: 10.12141/j.issn.1000-565X.210603
