Lane Line Detection Algorithm Based on Deep Learning

Received date: 2024-12-30

Online published: 2025-04-27

Supported by

the Key R&D Program of Heilongjiang Province (JD22A014); the National Vehicle Accident In-Depth Investigation System Project (NAIS-ZL-ZHGL-2020018); the National Natural Science Foundation of China (62173107)

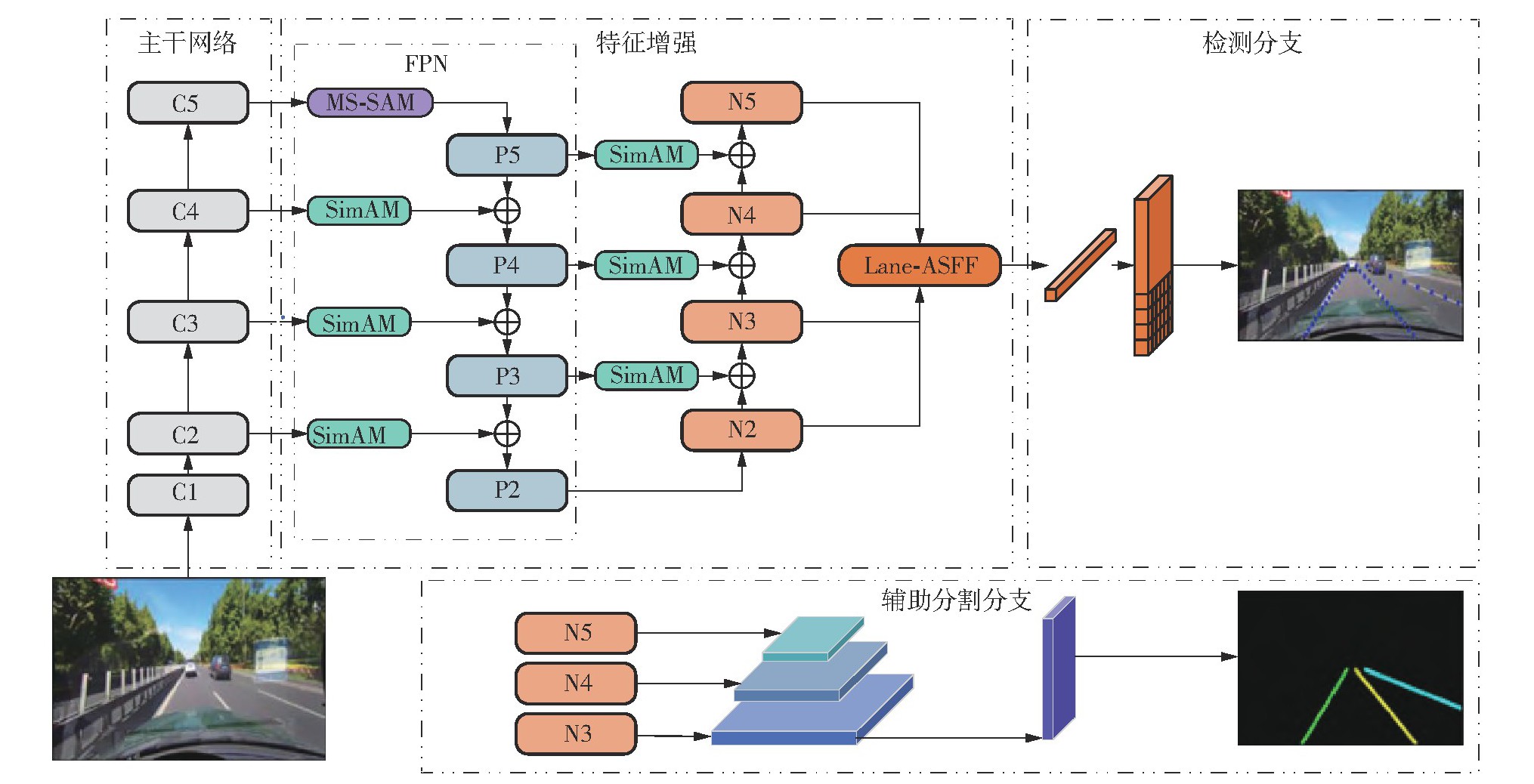

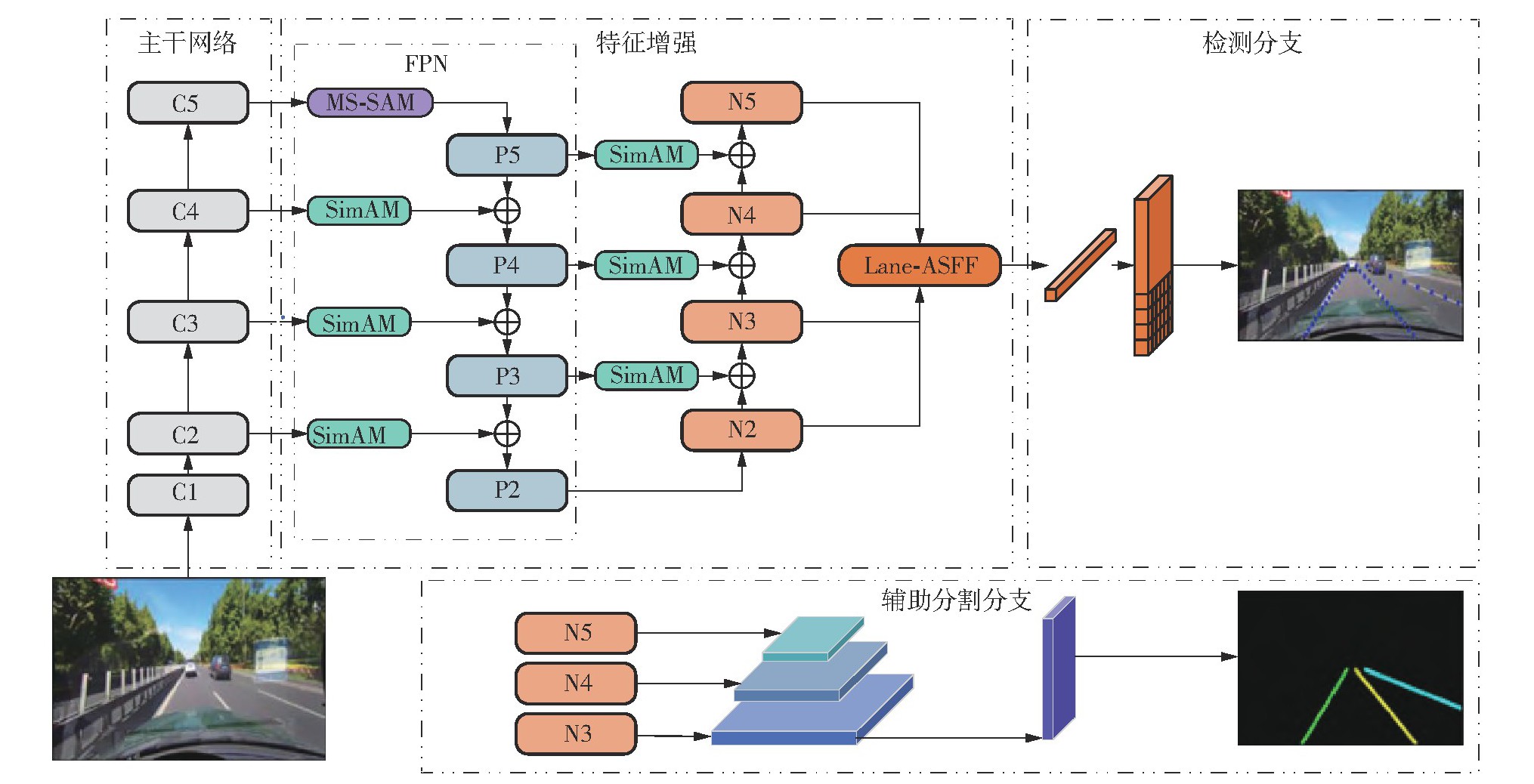

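To address the accuracy of lane line detection for intelligent vehicles in complex scenes, this paper proposes a lane line detection algorithm that integrates a multi-scale spatial attention mechanism with a path aggregation network (PANet). First, the algorithm adopts the row-anchor-based UFLD lane detection model and combines it with PANet, a feature pyramid enhancement module built with depthwise separable convolutions, to extract multi-scale image features. Next, a multi-scale spatial attention module is designed into the network framework, and the lightweight SimAM attention mechanism is introduced to strengthen the network's focus on target features. Then, an adaptive feature fusion module is designed that fuses the feature maps output by PANet across scales by adaptively adjusting the fusion weight assigned to each scale, improving the network's ability to extract complex features. On the TuSimple dataset, the proposed algorithm achieves a detection accuracy of 96.84%, 1.02 percentage points higher than the original algorithm and better than conventional mainstream algorithms. On the CULane dataset, it achieves an F1 score of 72.74%, outperforming conventional mainstream algorithms and exceeding the original algorithm by 4.34 percentage points; the improvement is particularly pronounced in extreme scenes such as strong light and shadow, showing that the algorithm detects lanes well in complex scenes. Real-time tests show an inference speed of 118.0 f/s, which meets the real-time requirements of intelligent vehicles.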
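The row-anchor formulation of UFLD, on which the algorithm builds, treats lane detection as row-wise classification: for every predefined row anchor, each lane is assigned to one of w gridding cells or to an extra "no lane" class, and the lane position is taken as the softmax-weighted expectation over the w cells. The following Python sketch decodes one lane under that formulation. The function names, the logit layout (w cell scores followed by one background score per row) and the uniform cell-to-pixel mapping are illustrative assumptions, not the authors' code.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def decode_lane(row_logits, img_width):
    """Decode one lane from row-anchor classification logits (hypothetical layout).

    row_logits holds one list per row anchor with w + 1 scores: indices
    0..w-1 are gridding cells, index w is the extra 'no lane' class.
    Returns one x coordinate in pixels per row anchor, or None where the
    'no lane' class has the highest score.
    """
    xs = []
    for scores in row_logits:
        w = len(scores) - 1
        best = max(range(w + 1), key=lambda k: scores[k])
        if best == w:  # background class wins: no lane point on this row
            xs.append(None)
            continue
        probs = softmax(scores[:w])  # softmax over the w cells only
        # Expected cell index, 1-based as in the UFLD formulation
        loc = sum((k + 1) * p for k, p in enumerate(probs))
        cell_width = img_width / w  # assumption: cells tile the image width uniformly
        xs.append((loc - 0.5) * cell_width)  # map to the centre of the expected cell
    return xs


# Two row anchors, w = 4 cells (toy values)
logits = [
    [0.1, 4.0, 0.2, 0.1, -1.0],  # peaked near cell 2
    [0.0, 0.0, 0.0, 0.0, 5.0],   # 'no lane' class dominates
]
print(decode_lane(logits, img_width=800))
```

Because the position is an expectation rather than an argmax, the decoded coordinate can fall between cell centres, which lets a coarse grid still give sub-cell localization.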
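The PANet module is built with depthwise separable convolutions, which factor a standard k×k convolution into a per-channel k×k depthwise convolution followed by a 1×1 pointwise convolution. The weight count drops from k²·C_in·C_out to k²·C_in + C_in·C_out, a reduction factor of 1/(1/C_out + 1/k²). The sketch below evaluates this for an illustrative 3×3, 128-to-128-channel layer; the layer size is an assumption chosen for the example, not a configuration reported in the paper.

```python
def conv_params(k, c_in, c_out):
    """Weight count of a standard k x k convolution (bias ignored)."""
    return k * k * c_in * c_out


def dw_separable_params(k, c_in, c_out):
    """Weight count of a depthwise k x k conv followed by a 1 x 1 pointwise conv."""
    depthwise = k * k * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out  # 1 x 1 convolution mixes channels
    return depthwise + pointwise


# Illustrative layer size (assumed, not taken from the paper)
k, c_in, c_out = 3, 128, 128
std = conv_params(k, c_in, c_out)
sep = dw_separable_params(k, c_in, c_out)
print(std, sep, round(std / sep, 2))  # 147456 17536 8.41
```

For 3×3 kernels the saving approaches 9× as the channel count grows, which is why the factorization suits a detector that must also run in real time.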
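SimAM is parameter-free: each activation is reweighted by a sigmoid of its inverse minimal energy, which grows with the squared deviation of the activation from its channel mean. The sketch below follows the published SimAM formulation (variance normalised by H·W − 1, regulariser λ = 1e-4) on nested Python lists. The pure-Python layout is for illustration only, and how the paper places SimAM inside its multi-scale spatial attention module is not reproduced here.

```python
import math


def simam(feature, e_lambda=1e-4):
    """Parameter-free SimAM attention on a C x H x W feature map (nested lists).

    For each channel, every activation x is multiplied by sigmoid(1 / e*),
    where 1 / e* = (x - mu)^2 / (4 * (var + lambda)) + 0.5, so activations
    that stand out from their channel mean receive larger weights.
    Requires H * W >= 2.
    """
    out = []
    for channel in feature:
        values = [v for row in channel for v in row]
        n = len(values) - 1  # H * W - 1, as in the SimAM formulation
        mu = sum(values) / len(values)
        var = sum((v - mu) ** 2 for v in values) / n
        new_channel = []
        for row in channel:
            new_row = []
            for v in row:
                inv_energy = (v - mu) ** 2 / (4 * (var + e_lambda)) + 0.5
                weight = 1.0 / (1.0 + math.exp(-inv_energy))  # sigmoid
                new_row.append(v * weight)
            new_channel.append(new_row)
        out.append(new_channel)
    return out


# One 2 x 2 channel with a single salient activation (toy values)
fmap = [[[1.0, 1.0], [1.0, 5.0]]]
print(simam(fmap))
```

Because the module adds no learnable weights, it leaves the parameter count unchanged, which matches the "lightweight" role the abstract assigns to it.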
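The adaptive feature fusion module is the authors' own design, and its internals are not given in this abstract. A common way to learn per-scale fusion weights is adaptively spatial feature fusion (ASFF): the pyramid outputs are resized to one resolution, a weight logit is predicted for every scale at every pixel, and the logits are softmax-normalised across scales before a weighted sum. The sketch below shows only that weighting step, assuming the maps are already resized and the logits are supplied directly; `adaptive_fuse` is a hypothetical name, and this is an ASFF-style illustration rather than the paper's module.

```python
import math


def adaptive_fuse(features, logits):
    """ASFF-style adaptive fusion of same-size feature maps.

    features: list of L maps, each C x H x W (nested lists), assumed to be
              resized to a common resolution beforehand.
    logits:   list of L weight maps, each H x W; in ASFF these come from
              1 x 1 convolutions, here they are given directly.
    At every pixel the L logits are softmax-normalised, so the fusion
    weights are positive and sum to 1, and the maps are blended with them.
    """
    num_levels = len(features)
    c, h, w = len(features[0]), len(features[0][0]), len(features[0][0][0])
    fused = [[[0.0] * w for _ in range(h)] for _ in range(c)]
    for y in range(h):
        for x in range(w):
            scores = [logits[l][y][x] for l in range(num_levels)]
            m = max(scores)
            exps = [math.exp(s - m) for s in scores]
            total = sum(exps)
            weights = [e / total for e in exps]  # per-pixel scale weights
            for ch in range(c):
                fused[ch][y][x] = sum(
                    weights[l] * features[l][ch][y][x] for l in range(num_levels)
                )
    return fused


level_a = [[[1.0, 1.0]]]  # C = 1, H = 1, W = 2 (toy values)
level_b = [[[3.0, 3.0]]]
weight_logits = [[[0.0, 10.0]], [[0.0, -10.0]]]
# First pixel: equal weights, result 2.0; second pixel: level_a dominates, result close to 1.0
print(adaptive_fuse([level_a, level_b], weight_logits))
```

Normalising per pixel rather than per map lets different image regions draw on different pyramid levels, for example fine levels near thin distant lane markings and coarse levels in shadowed areas.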
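The two benchmarks report different metrics. TuSimple accuracy is the number of correctly predicted lane points (within a fixed pixel threshold of the ground truth) divided by the number of ground-truth points, summed over clips. CULane reports an F1 score in which a predicted lane counts as a true positive when its IoU with a ground-truth lane exceeds a threshold (0.5 in the usual setting). The gains quoted above are percentage points, that is, absolute differences. The sketch below implements both metrics and converts the reported figures into the implied baseline scores, the relative F1 gain, and the per-frame latency at 118.0 f/s; the function names are illustrative.

```python
def f1_score(tp, fp, fn):
    """CULane-style F1 from lane-level true positive, false positive and false negative counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def tusimple_accuracy(correct_points, gt_points):
    """TuSimple accuracy: correctly predicted points over ground-truth points, summed over clips."""
    return sum(correct_points) / sum(gt_points)


# Figures from the abstract; gains are absolute (percentage points)
baseline_acc = round(96.84 - 1.02, 2)            # implied TuSimple baseline
baseline_f1 = round(72.74 - 4.34, 2)             # implied CULane baseline
relative_gain = round(4.34 / baseline_f1 * 100, 2)  # same F1 gain expressed in percent
latency_ms = round(1000 / 118.0, 2)              # milliseconds per frame at 118.0 f/s
print(baseline_acc, baseline_f1, relative_gain, latency_ms)  # 95.82 68.4 6.35 8.47
```

The distinction matters when comparing papers: a 4.34 percentage-point F1 gain over a 68.4 baseline is a relative improvement of about 6.35%.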

Keywords: lane line detection; deep learning; multi-scale spatial attention mechanism; adaptive feature fusion

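YUE Yongheng, ZHAO Zhihao. Lane line detection algorithm based on deep learning[J]. Journal of South China University of Technology (Natural Science Edition), 2025, 53(9): 22-30. DOI: 10.12141/j.issn.1000-565X.240609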


