A Self-Supervised Pre-Training Method for Chinese Spelling Correction

Received date: 2023-02-02

Online published: 2023-04-10

Supported by

the National Natural Science Foundation of China (61936003); Guangdong Basic and Applied Basic Research Foundation (2019B151502057)

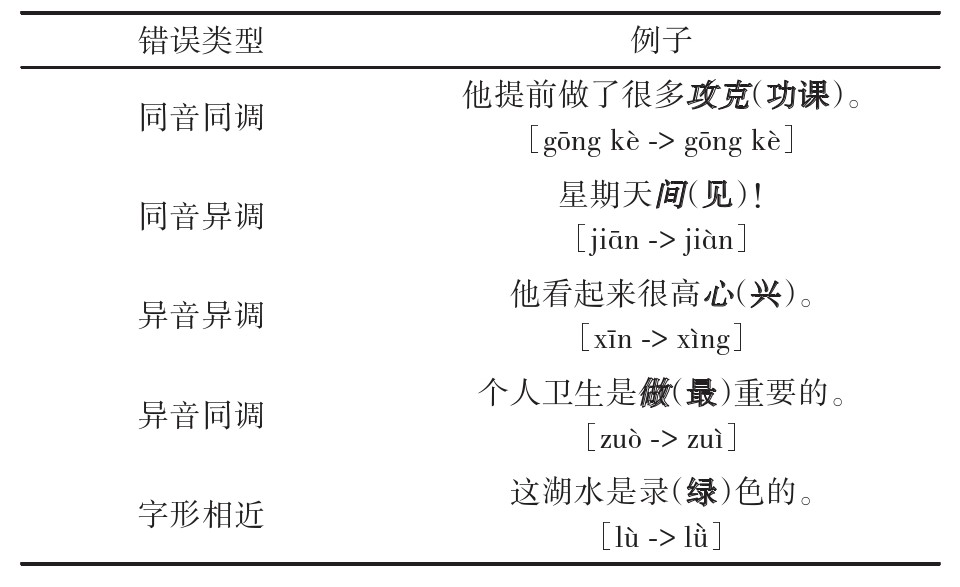

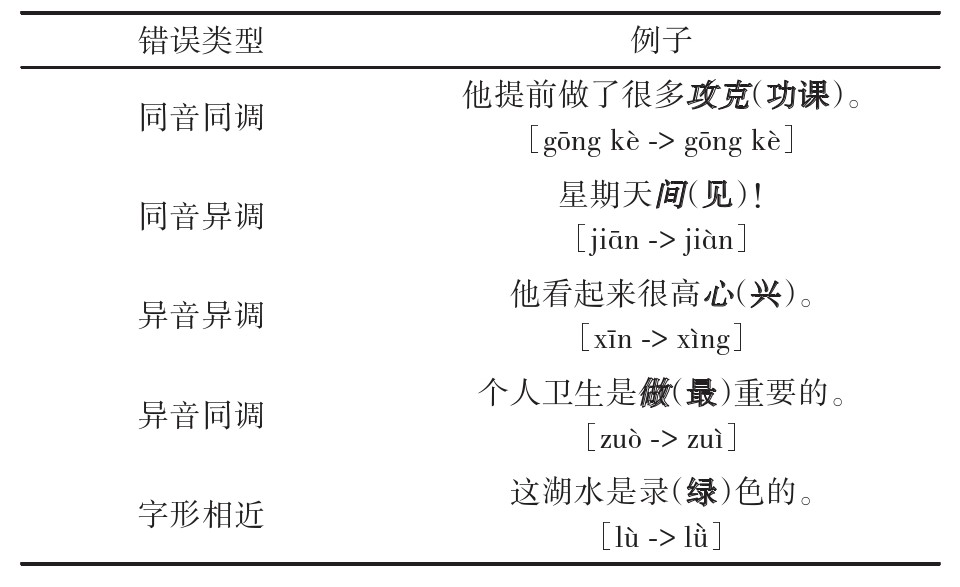

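SU Jindian, YU Shanshan, HONG Xiaobin. A Self-Supervised Pre-Training Method for Chinese Spelling Correction[J]. Journal of South China University of Technology (Natural Science Edition), 2023, 51(9): 90-98. DOI: 10.12141/j.issn.1000-565X.230031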

Although pre-trained language models such as BERT, RoBERTa, and MacBERT learn the grammatical, semantic, and contextual features of characters and words well through the masked language model (MLM) pre-training task, they lack the ability to detect and correct spelling errors. Moreover, in the Chinese spelling correction (CSC) task they face an inconsistency between the pre-training objective and the downstream fine-tuning objective. To further improve the spelling error detection and correction ability of BERT/RoBERTa/MacBERT, this paper proposes MASC, a self-supervised pre-training method for CSC. Building on MLM, MASC converts the prediction of the correct value of a masked character into the detection and correction of a misspelled character. First, MASC extends the character-level masking of MLM to whole word masking, aiming to improve BERT's ability to learn word-level semantic representations. Then, with the help of an external confusion set, the masked characters are replaced with candidate characters that have the same tone, a similar tone, or a similar glyph shape, and the training objective is changed to recognizing the correct characters, which enhances BERT's ability to detect and correct spelling errors. Finally, experimental results on three public CSC corpora, sighan13, sighan14, and sighan15, show that MASC further improves the performance of BERT/RoBERTa/MacBERT on downstream CSC tasks without changing their model structures. Ablation experiments also confirm the importance of whole word masking and of phonetic and glyph information.
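The corruption step described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `corrupt_whole_words`, the toy `CONFUSION_SET`, the pre-segmented input, and the default selection probability of 0.15 are all assumptions made for illustration. The abstract does not state the actual masking ratio or how candidates are sampled from the three categories.

```python
import random

# Hypothetical toy confusion set (an assumption, not the set used in the paper).
# Each character maps to candidates grouped by the three aspects named in the abstract.
CONFUSION_SET = {
    "天": {"same_tone": ["添"], "similar_tone": ["田"], "similar_shape": ["夫"]},
    "气": {"same_tone": ["汽"], "similar_tone": ["起"], "similar_shape": ["乞"]},
}


def corrupt_whole_words(words, confusion_set, select_prob=0.15, rng=None):
    """Build one (source, target) training pair from a word-segmented sentence.

    words: list of already segmented words (segmentation is assumed to be given).
    select_prob: chance that a whole word is selected (assumed value).
    Returns the corrupted characters, the correct characters, and a flag per
    character saying whether it was replaced.
    """
    rng = rng or random.Random()
    source, target, replaced = [], [], []
    for word in words:
        # Whole word masking: the decision is made once per word, not per character.
        selected = rng.random() < select_prob
        for ch in word:
            target.append(ch)  # the objective is always the correct character
            groups = confusion_set.get(ch, {})
            candidates = [c for group in groups.values() for c in group]
            if selected and candidates:
                # Replace with a confusable character instead of a [MASK] token.
                source.append(rng.choice(candidates))
                replaced.append(True)
            else:
                source.append(ch)
                replaced.append(False)
    return source, target, replaced


if __name__ == "__main__":
    src, tgt, flags = corrupt_whole_words(
        ["今", "天气", "好"], CONFUSION_SET, select_prob=1.0, rng=random.Random(0)
    )
    print("".join(src), "->", "".join(tgt), flags)
```

In this sketch the model input is `source` and the label at every position is the corresponding character in `target`, so pre-training and CSC fine-tuning share the same "read a possibly wrong character, output the right one" form. Characters without an entry in the confusion set are left unchanged here; a fuller version might fall back to a `[MASK]` token or a random character, which is again an assumption.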

