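Received date: 2019-06-27

Revised date: 2019-08-06

Online published: 2019-12-01

Supported by

Supported by the National Natural Science Foundation of China (51975434); the Discipline Innovation and Talent Introduction Base for New Energy Vehicle Science and Key Technologies (B17034); and the Excellent Graduate Dissertation Cultivation Project of Wuhan University of Technology (2018-YS-033)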

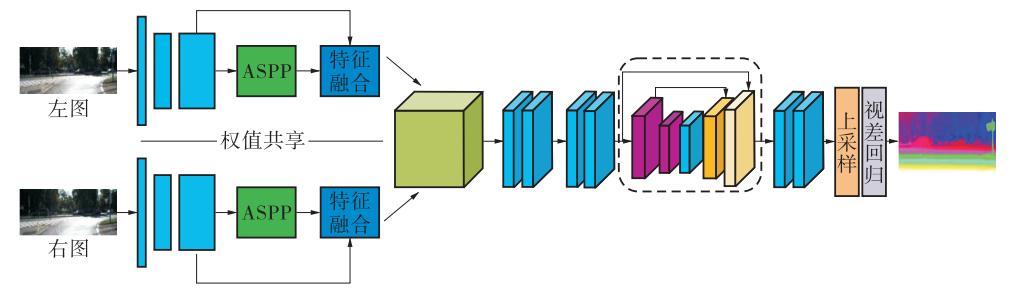

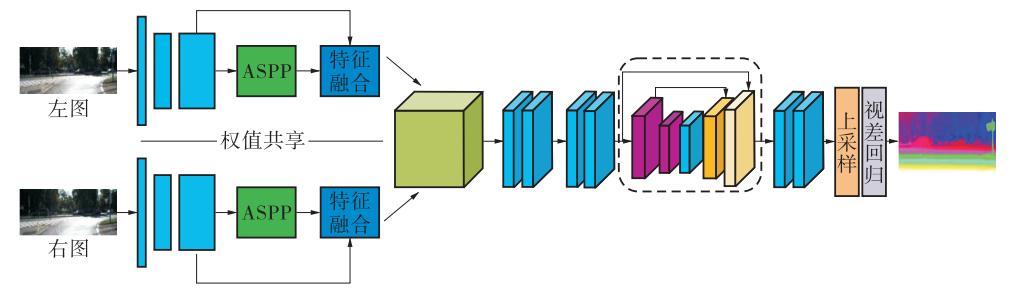

Improved Stereo Matching Algorithm Based on PSMNet


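LIU Jianguo, FENG Yunjian, JI Guo, et al. Improved Stereo Matching Algorithm Based on PSMNet[J]. Journal of South China University of Technology (Natural Science Edition), 2020, 48(1): 60-69, 83. DOI: 10.12141/j.issn.1000-565X.190388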
